As I have suggested in the past, humans are held responsible for the outcomes when they use automated systems. Aircraft flown with semi- and fully autonomous flight systems have crashed, and I cannot think of one instance where pilot error was not listed as the primary cause. (Perhaps the Boeing 737-Max is the exception to the rule.) As algorithmic medicine and decision-making move from "the lab" to clinical care, the question of whom to sue when things go wrong becomes relevant. So it should be no surprise that attorneys have studied what they consider "prospective jurors": you and me.

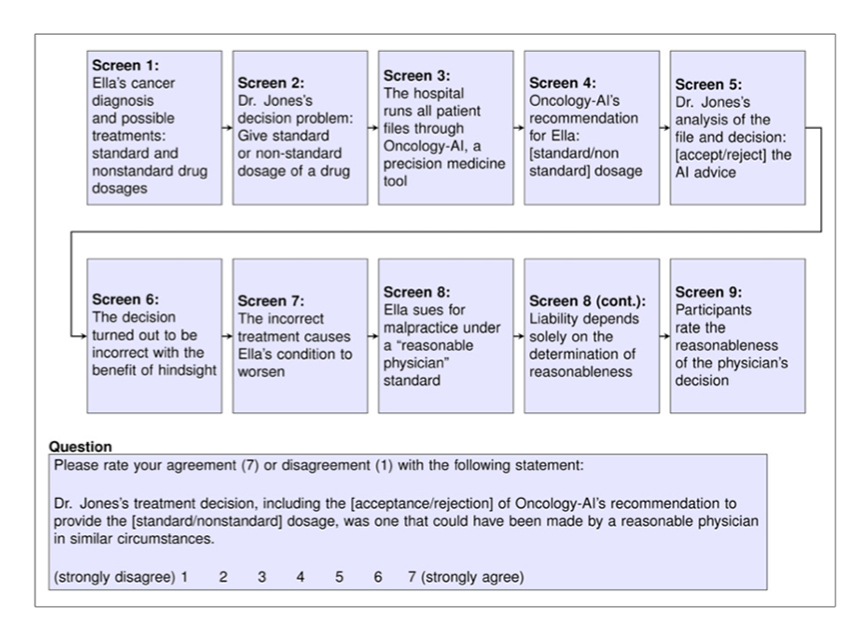

The researchers surveyed roughly 2,000 individuals online, in a sample stratified by age, gender, and race, and presented them with four different scenarios. A physician is treating a woman with ovarian cancer. An AI system that has "all the relevant facts and guidelines" recommends either the standard dose or an unconventional one, and the physician either accepts or rejects that recommendation. No matter what the physician chooses, the scenario ends badly for the patient. The question for participants: was this decision made by a reasonable physician?

Until you spend time in court, you do not realize how many meanings "reasonable" can take. Broadly, the law defines it through the standard of care, an equally fuzzy term meaning how a reasonable, average physician would act. This ambiguous definition of reasonable, standard care is why both sides in a malpractice case bring in experts whose job is to identify unconventional care and judge whether it deviated from standard practice. Knowing how people judge whether a physician using artificial intelligence decision aids acted reasonably is vital to trial attorneys; they have their eye on the eventual settlement prize.

The survey participants judged physicians most reasonable when they accepted the computer-selected standard care. They were judged increasingly less reasonable when they accepted a non-standard care recommendation or rejected a non-standard recommendation. When they rejected standard care recommended by the computer, they were deemed the least reasonable.

These prospective jurors applied two yardsticks in their determination: whether the care was standard, and whether the physician's decision agreed with the AI system. In short, "the algorithm made me do it" does not seem to be a great defense.

For those looking for a silver lining for AI decision support, the would-be jurors did not hold the algorithm as responsible as the physician; once again, the blame falls on the pilot. Of course, implementing AI decision-making will involve two steps. First, in most instances, these systems will have to be approved by the FDA. This will mark them as "trusted" and provide a shield to the computer wizards behind the algorithm. Second, the choice to deploy them will fall to hospital administrators, who hold the purchasing power.

If the rollout of vaccinations is any measure, the medical staff's opinions of health system planning can be, and are, easily ignored. Physicians may well find a new source of liability in these decision aids foisted upon them, much as they were handed the boat anchor we call electronic medical records to assist in care.

Source: When Does Physician Use of AI Increase Liability? Journal of Nuclear Medicine DOI: 10.2967/jnumed.120.256032