Failed Peer Review

Failed peer review in medical research isn’t new. In 1981, the noted Harvard epidemiologist Brian MacMahon published a “peer-reviewed” study in the prestigious New England Journal of Medicine (NEJM) claiming that coffee causes pancreatic cancer. Pancreatic cancer was then responsible for 20,000 deaths a year, and, according to the study, coffee consumption was possibly responsible “for a substantial proportion of the cases of this disease in the United States.”

According to the New York Times, which publicized the study,

“…coffee drinking has been blamed for many health problems, including ulcers, high blood pressure, heart attacks, gout, birth defects, anxiety and cancers of the stomach and urinary tract….”

The hope was that reducing coffee consumption would improve the health of Americans.

Except the coffee study was flawed. The flaws were present from the outset, in both its design and its analysis, which was found to be statistically biased. When the results could not be replicated, MacMahon initially defended the study amid considerable criticism. In good scientific fashion, a critique of MacMahon’s coffee study was published in another peer-reviewed paper, in the Journal of Thoracic Disease, although that report largely went unnoticed.

Eventually, in a rather bizarre fashion, he recanted. Several years later, reporting on more data, MacMahon wrote a letter to the editor of the NEJM that in effect renounced his earlier conclusions. Letters to the editor, however, are not peer-reviewed, so the validity of his “retraction” letter is itself open to question.

As ACSH’s Dr. Henry Miller has pointed out, peer review lately isn’t all it is supposed to be, a decline facilitated by the ease of statistical manipulation, which is hard to detect. Even so, watchdog organizations have had hundreds of papers retracted. And statistical manipulation isn’t the only way a study can be compromised; rank data substitution, plagiarism, and improper study design are among the others.

The fact remains that many of our standards of medical care and our behavioral choices are predicated on scientific reports, studies, and data, which we expect to have been reviewed by honest, anonymous experts in the field to lend credence to the research. That, it appears, is not always so.

The law, too, relies on peer review

Law students spend months studying the law of evidence, because not everything is admissible for a jury to consider in determining guilt or liability. Scientific evidence invites even closer study, a need made pressing by the “junk science” epidemic that infected courtrooms in the 1980s and 1990s, brought down several legitimate businesses, and inspired writers such as Peter Huber and Steve Milloy to call out the practice.

In the wake of the outcry against the indiscriminate admission of what was fallaciously called “science,” generally offered by plaintiffs’ lawyers, the Supreme Court handed down the Daubert case in 1993, followed by the Joiner case in 1997. (Together with the Kumho Tire case, they are known as the Daubert trilogy.)

Legal Standards

All federal courts and all but seven state courts follow the Daubert approach, which is grounded in the Federal Rules of Evidence. Trial judges act as gatekeepers, vetting proffered evidence to determine whether it meets the standards of sound science before it is admitted for consideration. Daubert gave judges a non-exhaustive, non-mandatory list of factors for establishing scientific admissibility, including publication in peer-reviewed literature, a criterion that is heavily influential.

Indeed, I can hardly imagine a judge tossing testimony derived from a peer-reviewed study that has not been rejected, retracted, recalled, recanted, or otherwise officially trashed. [1] However, under Daubert, studies that have not been published in peer-reviewed literature may still be admitted into evidence if they satisfy other assurances of reliability, relevance, and “fit.” [2] Under Joiner, experts may no longer testify based on personal opinion alone; their testimony must logically flow from established data or studies (generally peer-reviewed ones).

Seven states follow the older Frye standard, handed down by the DC Circuit Court in 1923 in a ruling that an early prototype of the lie detector, based on changes in blood pressure, was inadmissible to determine the credibility of a witness. The court reasoned that the device had not gained general acceptance in the scientific community as scientifically reliable. [3]

But what constitutes general acceptance in the scientific community? Again, peer review is considered tantamount to it (and here, non-peer-reviewed studies are generally excluded). Under both standards, if the scientific literature is later found seriously wanting, there is rarely a basis for appeal as long as the evidence was “generally accepted,” as evidenced by peer review or other sources, at the time it was proffered.

Here, we see one significant difference between the search for “truth” in law and in science: the findings of science are ever-changing and self-policing. Even if there is more plagiarism, data substitution, or fraud in the scientific literature than there once was, we remain confident that at some point the “truth” will be revealed and the line of scientific inquiry straightened. By comparison, the search for truth in law stops at the final appeal. After that, science or facts be damned.

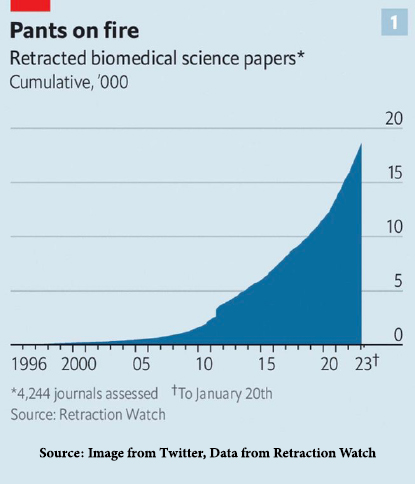

The Supreme Court last enumerated reliance on peer review as a determinant of valid science in 1993, 30 years ago. Since then, the amount of fraudulent science published in mainstream scientific literature and touted as peer-reviewed has increased dramatically.

“In 2022, there were about 2600 papers retracted in [biomedical literature]…. – more than twice the number in 2018. Some were the results of honest mistakes, but misconduct of one sort or another is involved in the vast majority of them.” [2]

By comparison, in 1996, the number of such retractions was virtually nil.

According to Retraction Watch data, the 200 authors with the most retractions account for one-quarter of 19,000 retracted papers. According to The Economist, many of these authors are senior scientists at prominent universities or hospitals: precisely the authorities to whom courts would defer, and whose work helps determine the standard of medical care, which in some cases has turned out to be harmful.

Few have spoken about the motives for such conduct, which brings us back to Brian MacMahon. A more prominent reputation makes it easier to get published, [4] seemingly inviting less scrutiny from reviewers and making misconduct easier to get away with. While some engage in this conduct to bolster their reputations, MacMahon was already an acknowledged expert by the time his coffee study was published, and there is no hint that he received remuneration for it. So, why?

No one really knows. But those who devote themselves to uncovering fraudulent publications lament that alerting the journals has little impact.

“Publishers, for their part, make profits from publishing more, not from investigating potential retractions. They also fear getting sued by belligerent fraudsters.” [2]

Most reputable scientists and doctors will concede that the peer-review system is broken. Perhaps it is time to remove peer review as a criterion for legally sound science as well. At the very least, lawyers should check the retraction status of the articles on which testimony or studies are based, as well as the status of any articles or studies cited or relied on in proffered testimony.

[1] This often does not account for the significant lag between initial publication and retraction; the mean lag is 28 months.

[2] “There is a worrying amount of fraud in medical research,” The Economist, Feb. 15, 2023 (print edition entitled “Doctored data”), pp. 79-82.

[3] Validity and reliability are two distinct concepts in science: the first concerns whether a study actually establishes what it purports to, and the second pertains to its replicability. The concepts are often commingled in law, where reliability is the specified standard. However, relevance, established in Daubert as a requirement, might be considered a stand-in for validity.

[4] “An influential academic safeguard is distorted by status bias,” The Economist, Sept. 17, 2022 (print edition entitled “Peer pressure”), pp. 68-69.