It has been a few centuries since the powers that be (the Catholic Church) censored (burned) information about the Earth’s position in relation to the Sun and placed its controversial public advocates (Galileo) in spiritual isolation and under house arrest. Historians point to the accumulation of more information as what eventually displaced those misinformed opinions. That assumption is a kind of informational Gresham’s Law in reverse, in which good information drives out bad and more information corrects misinformation. It is responsible for many of the electrons found in social media and on the Internet in general.

A new study asks whether individuals suffer from the “illusion of information adequacy”: failing to recognize that they have insufficient information to make a balanced decision. They do not know what they do not know, or what they need to know. The theoretical basis for the study is naïve realism, the tendency to assume our worldview is correct, leaving us blissfully unaware of what we do not know as we confidently navigate our world. The illusion of information adequacy builds on that earlier assumption of an informational calculus in which more information, the researchers’ unknown unknowns (thank you, Donald Rumsfeld), will alter our views. Let’s see what the research uncovered.

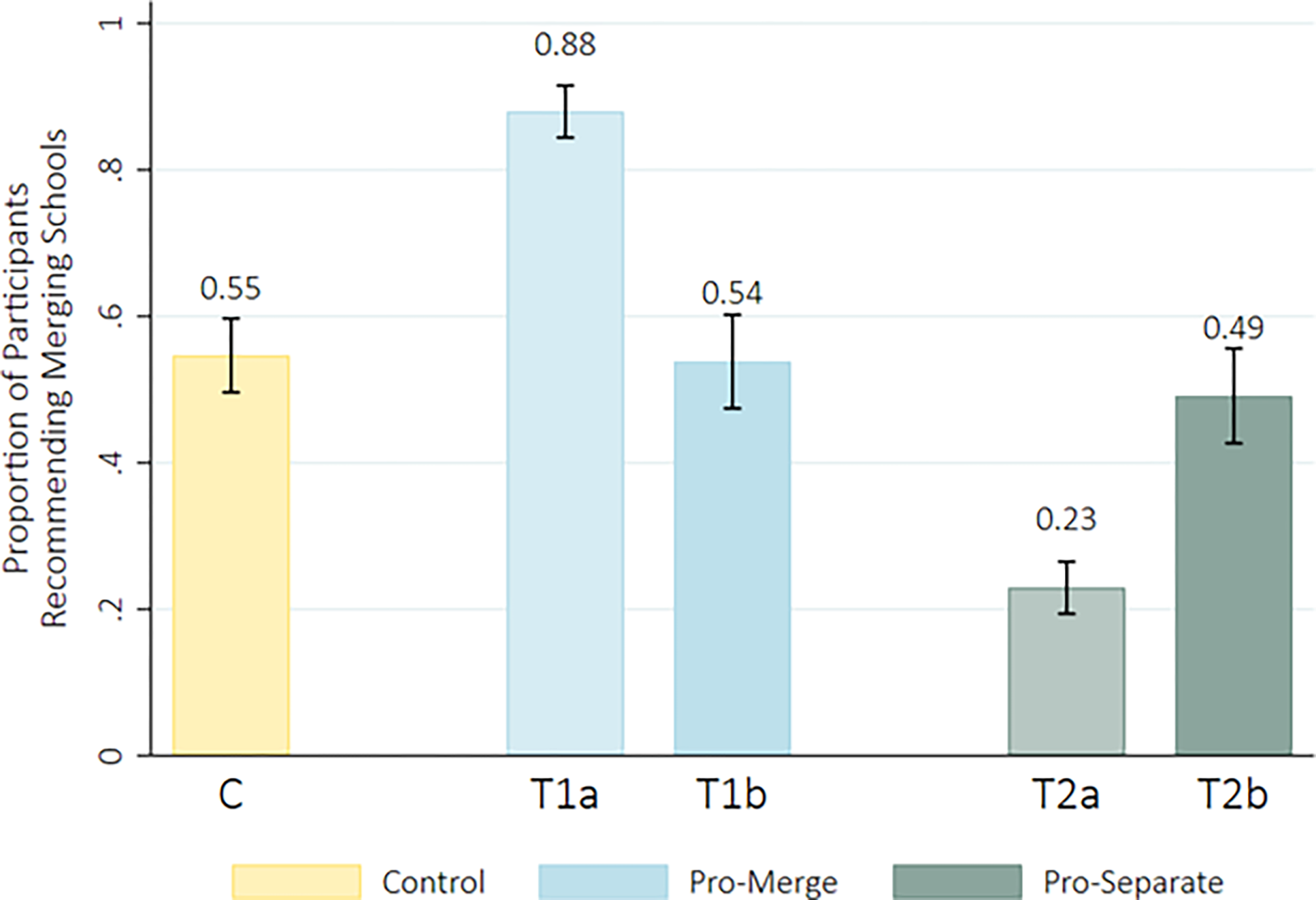

The study enrolled 1,261 online participants, who completed a series of surveys after reading about a school with a failing water supply. The school could either remain open, “taking their chances,” or merge with another school, with its own attendant risks. Twenty percent served as a control group and were given both “pro-merge” and “pro-separate” arguments. In the “treatment” arms, 40% were shown only the pro-merge arguments plus a neutral argument, and the remaining 40% only the pro-separate and neutral arguments. After answering the survey questions, half of each treatment arm was shown the previously withheld arguments and surveyed again. The participants’ median education level was 3.8 years of college; 60% were male, 71% were Caucasian, and their mean age was 39.8 years.

After reading the materials, each group was asked whether they had adequate information to make a decision, whether they were now competent to make that decision, what that decision would be, how confident they were in that choice, and whether other people would agree with them. Treatment participants who were not shown the missing information were also asked whether they had the key details of the situation, whether they had other questions, and whether they would be curious to know more.

After the usual statistical comparisons, the researchers concluded:

- “People presume that they possess adequate information—even when they may lack half the relevant information or be missing an important point of view….

- They assume a moderately high level of competence to make a fair, careful evaluation of the information in reaching their decisions…

- Their specific cross-section of information strongly influences their recommendations…

- Our participants assumed that most other people would reach the same recommendation that they did.”

No surprises here. But then there is this.

“Contrary to our expectations, although most of the treatment participants who ultimately read the second article and received the full array of information did stick to their original recommendation, the overall final recommendations from those groups became indistinguishable from the control group [supporting] the idea that sharing or pooling of information may lead to agreement.”

But is that support real or wishful thinking? The researchers point out that other studies involving capital punishment found that more information did not move anyone, let alone a third of the individuals, as the current researchers identified. They suggested that capital punishment is an issue with “strong prior opinions,” whereas their school scenario carried no such strong priors. I want to offer a word in place of “strong” that I believe sheds more light: emotional.

I wish to argue that our worldviews are invested with emotional meaning, derived from our desire for kinship with others, a faith in information that is uncertain to others, the joy of moral outrage and indignation that elevates one in relation to others at no cost, and the cost of “cognitive dissonance” when we try to hold two disparate beliefs simultaneously.

“Information sometimes represents reality, and sometimes doesn’t. But it always connects.”

– Yuval Harari

In his latest book, Nexus, Harari points out that our worldview “brings our attention to certain aspects of reality while inevitably ignoring other aspects,” as the researchers demonstrated. But his essential point is that

“Information doesn’t necessarily inform us about things. Rather, it puts things in formation.”

Those formations are part of the emotional meaning we ascribe to our worldviews, and they are why our views on capital punishment are much less susceptible to more information than the researchers' school scenario. One’s worldview is closely tied to one's identity, and this is not some downstream digital effect; it is how we have always viewed information, at least the information that comes with an emotional charge.

In The Science of Communicating Science, Craig Cormick phrases it somewhat differently.

“...for issues that are not emotional or electorally sensitive, there’s a good chance that the science input will count for something. But if the issue is being dominated by emotions and is electorally sensitive in any way – sorry science.”

That, I believe, is why our COVID response and its aftermath have been so destructive to our trust in the activity of science. I am resistant to the researchers’ informational calculus because information’s underlying emotional vibe, Donald Rumsfeld’s known unknown, is rarely accounted for when we believe that more information will drive out bad information.

Ultimately, this study confirms what we probably all feared: more data doesn’t necessarily equal better decisions, especially when our emotions pull the strings. Our deeply held beliefs, soaked in emotional investment, aren’t budging just because some academics throw more statistics at us. Sometimes ignorance is less about a lack of information and more about clinging to a comforting worldview.

Source: “The illusion of information adequacy,” PLOS ONE. DOI: 10.1371/journal.pone.0310216