Chest x-rays are the most commonly performed imaging study, and they serve mostly as a screening tool. Could they also act as a crystal ball for our future health? A group of researchers using a form of artificial intelligence, a convolutional neural network [1], believes the answer is yes. The study sought only to show that chest x-rays contain hidden prognostic information. Let us see what they found.

- The training images used to develop the algorithm came from a long-term screening study for prostate, lung, colorectal, and ovarian cancers that enrolled both smokers and nonsmokers. The test set consisted of participants from that study who were not used in training, plus participants from a second screening study of a higher-risk group: people with a smoking history of more than 30 pack-years.

- The primary outcome was all-cause mortality.

- The study populations were slightly more male than female, nearly all Caucasian, and around 62 years old on average. Although follow-up ranged from 6 to 12 years, the number of deaths per 1,000 person-years was essentially the same. [2]

- The neural network produced a chest x-ray risk score, which was stratified into four groups of increasing risk.
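The deaths-per-1,000-person-years comparison above is simple arithmetic, sketched here in Python. The cohort sizes and death counts below are hypothetical, chosen only to show how groups followed for 6 and 12 years can end up with the same rate; they are not the study's data.

```python
def person_years(followup_years):
    """Total person-years: the sum of each participant's years of follow-up."""
    return sum(followup_years)


def deaths_per_1000_person_years(deaths, total_person_years):
    """Crude mortality rate, scaled to 1,000 person-years."""
    return 1000 * deaths / total_person_years


# Thirty people each followed for ten years contribute 300 person-years.
print(person_years([10] * 30))  # 300

# Hypothetical cohorts (not from the study): 1,000 people followed 6 years
# versus 1,000 people followed 12 years. Twice the deaths over twice the
# follow-up gives the same rate.
short_followup = deaths_per_1000_person_years(deaths=60, total_person_years=6 * 1000)
long_followup = deaths_per_1000_person_years(deaths=120, total_person_years=12 * 1000)
print(short_followup, long_followup)  # 10.0 10.0
```

Normalizing by person-years is what lets studies with different follow-up lengths be compared on one scale.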

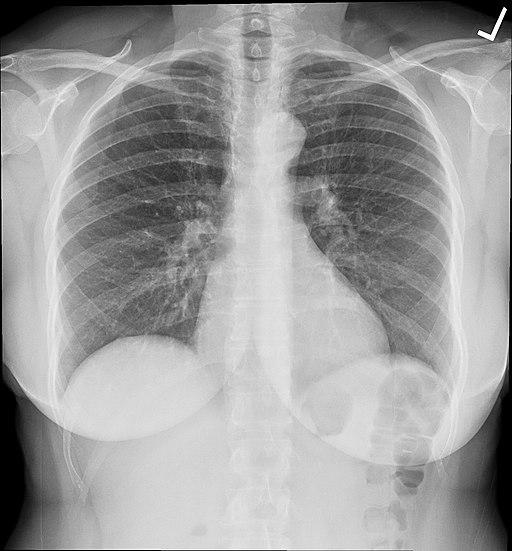

The figure above shows the graphics and the survival curves.

The chest x-ray risk score predicted all-cause mortality roughly 70% of the time (A), and slightly less often in the group of dedicated smokers (B). More importantly, adding the neural network's chest x-ray risk analysis raised predictive performance from 50%, when based solely on the radiologist's findings, to 70%. Translated: a radiologist's findings alone gave a coin-flip chance of predicting mortality, while the features the neural network found hidden in plain sight raised that to 70%.
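Comparisons like "coin flip" versus "70%" are usually discrimination measures such as the C statistic (equivalent to the area under the ROC curve for a yes/no outcome): the probability that a randomly chosen patient who died had a higher risk score than a randomly chosen patient who survived. Assuming that is the kind of metric behind these percentages, here is a minimal sketch with made-up scores showing how it is computed and why 0.5 means chance. It ignores censoring; survival versions such as Harrell's C index also account for patients lost to follow-up.

```python
from itertools import product


def c_statistic(scores_died, scores_survived):
    """Fraction of (died, survived) pairs in which the patient who died
    had the higher risk score. Tied scores count as half a concordant pair."""
    concordant = 0.0
    for died, survived in product(scores_died, scores_survived):
        if died > survived:
            concordant += 1.0
        elif died == survived:
            concordant += 0.5
    return concordant / (len(scores_died) * len(scores_survived))


# Hypothetical risk scores, not from the study.
died = [0.9, 0.7, 0.4]
survived = [0.8, 0.5, 0.3, 0.2]
print(c_statistic(died, survived))  # 0.75 (9 of 12 pairs ranked correctly)

# A score that carries no information (everyone gets the same value).
print(c_statistic([0.5, 0.5], [0.5, 0.5, 0.5]))  # 0.5, a coin flip
```

A perfect ranking scores 1.0. Moving from 0.5 to about 0.7 means the model orders patients correctly in substantially more pairs than chance, though still far from always.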

Is it ready for primetime?

The artificial intelligence stratified the risk of dying better than a radiologist looking at a single film, but, as the researchers state, its “prognostic value was complementary to the radiologists’ diagnostic findings and standard risk factors…. Our data suggest that deep learning systems can extract prognostic information from existing diagnostic studies.” They suggest that we view these images less as a screening tool for respiratory disease and more as a summary of our current overall health.

Assuming that is true, what should we do with the information? The score is not cause-specific, so it leaves two options: treat it as a screening test and proceed with a more intensive investigation, or give patients the usual general advice (watch what you eat, exercise, don’t smoke). Given the technique’s current accuracy, using it for screening would label many patients falsely positive, generating anxiety and cost.
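To see why a test of modest accuracy produces many false positives when used for screening, here is a back-of-the-envelope sketch. The population size, the 2% of people who have the outcome, and the 80% sensitivity and specificity are all hypothetical values chosen for illustration, not figures from the study.

```python
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Expected true positives, false positives, and positive predictive
    value (PPV) when a test screens a whole population."""
    affected = population * prevalence
    unaffected = population - affected
    true_pos = affected * sensitivity          # affected people correctly flagged
    false_pos = unaffected * (1 - specificity)  # unaffected people wrongly flagged
    ppv = true_pos / (true_pos + false_pos)     # share of flagged people truly affected
    return true_pos, false_pos, ppv


# Hypothetical inputs, not from the study: 10,000 people screened,
# 2% with the outcome, 80% sensitivity, 80% specificity.
tp, fp, ppv = screening_outcomes(10_000, 0.02, 0.80, 0.80)
print(round(tp), round(fp), round(ppv, 3))  # 160 1960 0.075
```

Under these assumed numbers, fewer than 1 in 10 flagged patients actually has the outcome; the rest bear the anxiety and cost of further workup.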

There was, ironically, a hidden gift. Explaining their results remains a weakness of these black-box algorithms, but in working to do so, the researchers identified the areas within the chest x-ray that the machine relied on for its judgments. While the researchers caution about the limitations of this analysis, two areas are worth noting. First, there is imaging information from the lower part of the film, primarily the soft-tissue silhouette, which can identify the gender, age, and body habitus of the patient: data that radiologists often see but do not feel required to describe because the referring physician already knows them. [3] Second, there was a host of pathologic data in the silhouette of the heart, the heart itself, and the origin of the aorta. These areas change in size, shape, and calcification with disease. Again, radiologists often see these features and report them when the clinical situation requires.

[1] These networks are used to analyze images and loosely model our visual system, which relays input from the retina to the visual cortex. Along the way, we process the information, looking for edges, shapes, and patterns until we “see” what is in front of us. These networks use layers of mathematical filters to mimic the processing of these visual units and assemble a larger picture.

[2] For those unfamiliar with person-years, the measure accounts for both the number of participants and the length of their follow-up. Thirty individuals followed for ten years contribute 300 person-years.

[3] During World War II, when x-ray contrast material was difficult to acquire, many radiologists used these soft-tissue findings to draw conclusions about underlying diseases; it became an art form. After the war, as contrast became more available, those subtle diagnostic features were lost to newer generations of radiologists, only to be rediscovered by machines.
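To make footnote [1] concrete, here is a minimal sketch of the basic operation a convolutional layer performs: sliding a small filter across an image and summing the weighted pixels under it. The toy image and the hand-picked edge-detecting kernel are assumptions for illustration; a real network learns many such filters from training data rather than using a fixed one.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` ('valid' positions only) and sum the
    elementwise products at each position. Like CNN layers, this does not
    flip the kernel (strictly, it is cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


# A toy 5x6 "image": dark on the left (0), bright on the right (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# A hand-picked vertical-edge filter (Sobel-style); a CNN would learn its own.
kernel = [[-1, 0, 1],
          [-2, 0, 2],
          [-1, 0, 1]]

for row in convolve2d(image, kernel):
    print(row)  # each of the 3 rows prints [0, 36, 36, 0]
```

The filter stays silent over flat regions and responds only where dark meets bright, the same kind of edge the early stages of our visual system pick out. Deeper layers combine such edge maps into shapes and patterns.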

Source: “Deep Learning to Assess Long-Term Mortality from Chest Radiographs,” JAMA Network Open. DOI: 10.1001/jamanetworkopen.2019.7416