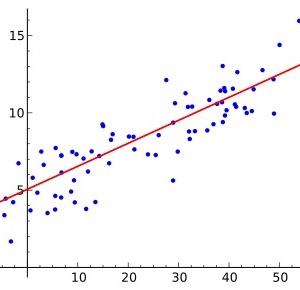

It is oft-repeated that correlation does not imply causation. But it does. That's precisely why epidemiologists and economists are so fascinated by correlations. Thus, it is far more accurate to say that correlation does not prove causation.

There are two major reasons for this. The first is confounders: hidden factors that are the true causes of the observed effect. For instance, one might be tempted to conclude that moving to Florida makes people develop Alzheimer's. But this correlation is confounded by age; in reality, older people both retire to Florida and develop Alzheimer's. The Sunshine State is blameless.
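To see how a confounder can manufacture an association, here is a minimal sketch in Python. Every count in it is invented for illustration (none comes from a real study), and the names `population`, `risk`, and `risk_ratio` are hypothetical. Within each age group, Alzheimer's risk is set to be identical in Florida and elsewhere, yet the pooled comparison makes Florida look risky simply because its residents skew older.

```python
# Hypothetical counts, invented for illustration only:
# (age_group, lives_in_florida) -> (people, alzheimers_cases)
population = {
    ("old", True): (18_000, 1_800),
    ("old", False): (42_000, 4_200),
    ("young", True): (7_000, 35),
    ("young", False): (133_000, 665),
}


def risk(florida, age_group=None):
    """Share of people with Alzheimer's, optionally within one age group."""
    people = cases = 0
    for (age, in_florida), (n, k) in population.items():
        if in_florida == florida and age_group in (None, age):
            people += n
            cases += k
    return cases / people


def risk_ratio(age_group=None):
    """Risk in Florida divided by risk elsewhere."""
    return risk(True, age_group) / risk(False, age_group)


print(f"crude risk ratio:       {risk_ratio():.2f}")
print(f"risk ratio among old:   {risk_ratio('old'):.2f}")
print(f"risk ratio among young: {risk_ratio('young'):.2f}")
```

With these made-up numbers, the crude ratio comes out at about 2.6, while both age-specific ratios are 1. Comparing like with like (old with old, young with young) makes the apparent Florida effect disappear.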

The second reason is dumb luck. Sometimes two variables correlate for no good reason, such as the number of people who drown in swimming pools and the number of movies featuring Nicolas Cage.
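Dumb luck becomes almost inevitable when enough variables are compared. The following Python sketch is an illustration under stated assumptions, not an analysis of real data: it draws one random ten-point "target" series, compares it against 1,000 equally random and completely unrelated series, and reports the strongest correlation found. The function names, the series length, and the number of candidates are all arbitrary choices.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def strongest_chance_correlation(n_series=1000, n_points=10, seed=1):
    """Largest |r| between one random series and many unrelated random series."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_points)]
    best = 0.0
    for _ in range(n_series):
        candidate = [rng.gauss(0, 1) for _ in range(n_points)]
        best = max(best, abs(pearson(target, candidate)))
    return best


print(f"strongest correlation found by chance: {strongest_chance_correlation():.2f}")
```

None of the candidate series has anything to do with the target, yet with short series and many comparisons the best match will typically look impressively strong.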

But what if, even after considering confounding and dumb luck, a correlation persists and suggests that a real cause-and-effect relationship exists? When can we say with some certainty that A causes B? In a 1965 address to the Section of Occupational Medicine of the Royal Society of Medicine, epidemiologist Austin Bradford Hill answered that question.

Hill's Criteria of Causality

Hill introduced nine criteria that researchers should consider before declaring that A causes B:

(1) Strength of association. We have never performed a clinical trial for smoking, in which we randomly assigned people to smoke cigarettes. Yet, we know for a fact that smoking causes cancer. Why? Because observational studies have shown that smoking increases a man's risk of lung cancer by 2,300% and a woman's by 700%. That association is so strong that it cannot be disputed. However, studies that show that A increases the risk of B by merely a few percent are far less convincing.

(2) Consistency. Do all or most studies indicate that A causes B? If the experiment is repeated in another country or at another time, are similar data produced? We cannot cherry-pick evidence that supports a causal relationship but ignore evidence that disputes it.

(3) Specificity. If A truly causes B, it beggars belief to argue that A also causes C, D, E, F, and G. We should be suspicious when a single risk factor becomes an all-purpose boogeyman. Consider endocrine disruptors, which have been linked to a myriad of conditions, such as obesity, diabetes, behavioral anomalies, reproductive anomalies, and early puberty. The more diseases are linked to endocrine disruptors, the less believable the argument becomes.

(4) Temporality. If A causes B, then A must precede B. However, the converse is not true: just because A precedes B does not mean A causes B. Establishing which came first can also be difficult. A good example is the association between drug use and mental illness. While drugs may contribute to mental illness, it is also likely that some people take drugs to self-medicate against a mental illness they already have.

(5) Biological gradient ("dose-response"). The more a person is exposed to A, the likelier he should be to get disease B. The more cigarettes a person smokes, the likelier he is to get lung cancer. This notion, known as dose-response, presents another challenge to endocrine disruptors. Researchers who contend that endocrine disruptors cause disease say they do so only at low concentrations, not at high ones (a non-monotonic dose-response, sometimes loosely called "hormesis"). While that's theoretically possible, it is also rather difficult to believe.

(6) Plausibility. There should be a reasonable biological mechanism to explain why A causes B. Arguing that vegetable oil will turn girls into lazy, TV-watching diabetics fails this criterion rather spectacularly.

(7) Coherence. A hypothesis that A causes B should make sense in the context of what we already know about A and B. This is why we should blame the pizza, not the box that it comes in, for obesity.

(8) Experiment. For obvious ethical reasons, it is usually not possible to conduct clinical trials to determine disease etiology. However, any data gathered from interventions should support the claim that A causes B. If air pollution causes lung cancer and cardiovascular disease, then a city that passes a law to decrease air pollution should eventually observe fewer cases of lung cancer and cardiovascular disease.

(9) Analogy. We already know that viruses, such as rubella and cytomegalovirus, can cause birth defects. Therefore, it should not be difficult for us to accept that Zika also causes birth defects.
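The arithmetic behind criterion (1) is worth spelling out, because a percentage increase can obscure how large an effect is when expressed as a multiple of the baseline risk. A short Python sketch follows; the function name is hypothetical, and the 5% figure is an assumed stand-in for "a few percent" rather than a number from any study.

```python
def relative_risk_from_percent_increase(percent_increase):
    """Convert 'risk increases by X%' into a relative risk (a multiple of baseline)."""
    return 1 + percent_increase / 100


examples = [
    ("men who smoke", 2300),
    ("women who smoke", 700),
    ("weak association (assumed)", 5),
]

for label, pct in examples:
    rr = relative_risk_from_percent_increase(pct)
    print(f"{label}: +{pct}% means {rr:g} times the baseline risk")
```

A 2,300% increase is a 24-fold risk and a 700% increase is an 8-fold risk. A 5% increase, by contrast, is a relative risk of 1.05, small enough that modest confounding or chance could plausibly account for it.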

Limits to Hill's Criteria of Causality

It is important to note that these nine criteria should be thought of more as guidelines than as a checklist. Quite simply, even if A truly causes B, A still might not meet all nine criteria. On the other hand, a risk factor that meets all nine criteria might still owe its association to a confounder rather than be a true cause.

As usual, rigid adherence to a list is not an adequate substitute for wisdom and sound judgment.

Source: Austin Bradford Hill. "The Environment and Disease: Association or Causation?" Proc R Soc Med 58 (5): 295–300. Published: May 1965.