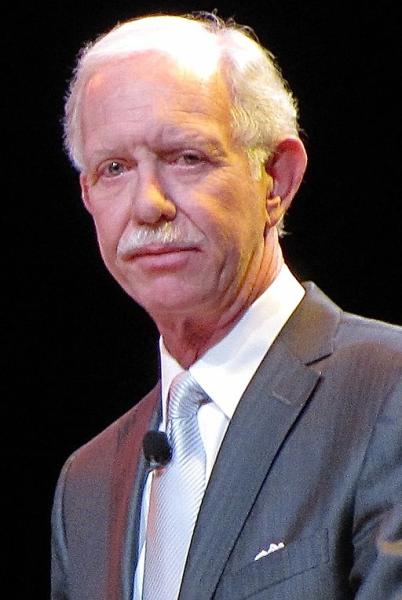

“We do not yet know what challenges the pilots faced or what they were able to do, but everyone who is entrusted with the lives of passengers and crew by being in a pilot seat of an airliner must be armed with the knowledge, skill, experience, and judgment to be able to handle the unexpected and be the absolute master of the aircraft and all its systems, and of the situation,” said Captain Sullenberger.

Thank you, Captain Sullenberger, for speaking truth to power.

Although he was speaking specifically about Boeing’s current troubles with automated systems and automated design, his remarks apply equally to many of the ways Big Automation is affecting other industries. I have written previously about the false promises of autonomous cars: we won’t be sitting in the back watching a movie, and, as Sullenberger suggests, drivers will still need the “knowledge, skill, experience, and judgment to be able to handle the unexpected.”

My concern lies with my own profession as a surgeon-physician. We need look no further than the growing discontent with the electronic medical record, a software hoax perpetrated on the government and physicians and, by extension, on us all. Kaiser Health News recently published a comprehensive review. In addition to detailing how these systems are eroding the physician-patient relationship, leaving the physician more obligated to capture data than to talk with the patient, it discusses other, more hidden issues: disappearing notes, orders correctly entered but never acted upon, and no accountability on the part of the software designers or their corporate overlords.

The hands-on technology physicians use daily, from navigating through the arterial system to place a stent or heart valve, to treating uterine fibroids, aortic aneurysms, and intracranial bleeding without traditional surgery, to writing prescriptions for patients with multiple medical conditions, may not be as cutting-edge as the 737’s autopilot, but it can be equally complex. It requires “the knowledge, skill, experience, and judgment to be able to handle the unexpected and be the absolute master… .”

There are many paths to mastery; I do not believe that medical school is the only source of masters. But the same forces that place a co-pilot with allegedly 200 hours of experience in a cockpit place “mid-level providers” in offices, urgent care centers, and hospitals. They are masters until they are not, until mid-level is insufficient. But we have checks and balances, right? Physician extenders can consult with their physician colleagues. That process helps, but it is not a guarantee. Consider the Air France flight lost over the Atlantic, where the co-pilots recognized too late that they needed help; the captain was woken from his rest and asked to deal with a situation he didn’t understand. Situational awareness is not easily or consistently summed up and transmitted in a rounding report between physicians or nurses.

Artificial intelligence is all the rage, but merely appending the word “smart” to an object does not make it so. A plethora of devices come with companion studies demonstrating that their conclusions are as good as a human’s, provided you follow the instructions and the indications for use. In how many instances will these devices be handed to individuals who are ill-trained or not the “absolute master” of the device? And before you say they can be made foolproof, remember how clever fools really are.

A hospital where I worked needed to upgrade the imaging system in its cath lab. It was a significant purchase and entailed presentations by three big manufacturers of these systems (GE, Siemens, and Philips). As part of the package, select techs were sent for training on the new software; the physicians were offered an hour. As a result, no one became the master of the new software, and imaging continued as it always had. All the upgrades, such as reduced radiation exposure for patients and staff, or magnification that might reveal, and perhaps allow treatment of, a lesion that previously could not be addressed, were not so much lost as never discovered. After more than five years of use, how many physicians would claim to be masters of the electronic medical record? Or can they merely get their work done, never taking advantage of what the digital revolution might offer?

The disastrous crashes of the 737 MAX are more than a wake-up call for Boeing, the FAA, or the flying public. They are a message that our mastery of technology lags behind the technology itself. That lag explains the conundrum of hate speech on social media, our concerns about privacy in online commerce of all types, and why physicians are frustrated, angry, and depressed at being required to be the servant of the medical record, not its master.

Captain Sullenberger saved 155 lives on US Airways Flight 1549 because he could fly a plane, that plane, without all the bells and whistles. He was the master; the autopilot was the servant. We were so willing to listen to Captain Sullenberger on January 15, 2009. His words at the beginning of this article have the potential to save many more lives, if only we will listen.