Americans are no strangers to “times that try men's souls,” to borrow a phrase from Thomas Paine. By mid-1945, the United States had been at war for three-and-a-half years, enduring the draft, separation from loved ones, mounting casualties, and rationing, with no end in sight. Many Americans were weary, not unlike how many of us feel now, after more than three years of varying degrees of privation and anguish related to the COVID-19 pandemic.

That sense of anxiety and the widespread desire to return to normality brought to mind how WWII was suddenly – and to many, unexpectedly – resolved. August 6th marks one of the United States' most important anniversaries, memorable not only for what happened on this date in 1945 but for what did not happen.

What did happen was that the Enola Gay, an American B-29 Superfortress bomber, dropped Little Boy, a uranium-based atomic bomb, on the Japanese city of Hiroshima. That historic act hastened the end of World War II: Japan announced its surrender less than a week after the August 9 detonation of Fat Man, a plutonium-based bomb, over Nagasaki.

They were the only two nuclear weapons ever used in warfare.

Although they occurred before I was born, I have several personal connections to those events. The first is that when Little Boy was dropped on Hiroshima, my father, a staff sergeant in the U.S. Army infantry who had fought in the Italian campaigns of WWII, was on a troopship, expecting to be deployed to the Pacific theater of operations. (Germany had surrendered three months earlier.) Neither he nor his fellow soldiers relished the prospect of participating in the impending invasion of the Japanese main islands. When the Japanese surrendered on August 14, the ship headed instead for Virginia, where the division was disbanded. Dad went home to Philadelphia, and I was born two years later.

My second connection came to mind recently amid all the publicity surrounding the new film “Oppenheimer,” about J. Robert Oppenheimer's role in developing the atomic bombs. He was the young theoretical physicist who directed the Los Alamos Laboratory, the nucleus of the Manhattan Project, the military research program that developed the bombs. My once-removed link is that three of my M.I.T. physics professors had participated in the Manhattan Project three decades earlier and sometimes related stories about the experience.

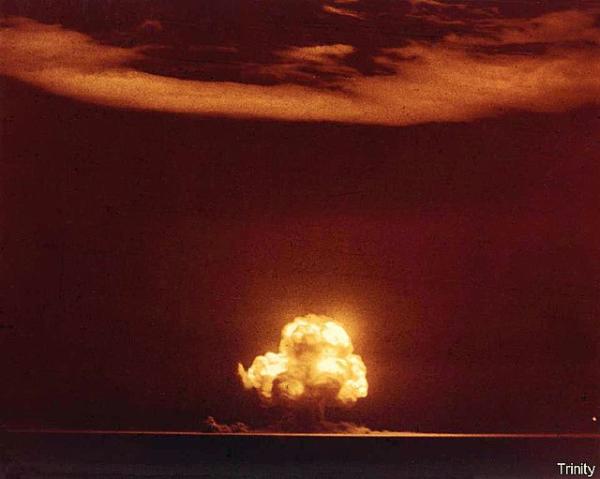

In class, one of them recalled that after the first test explosion, code-named “Trinity,” on July 16, 1945, in Alamogordo, NM, about 200 miles southeast of Los Alamos, he was assigned to drive Army Major General Leslie Groves, the director of the project, to view the result. They arrived to find a crater 1,000 feet in diameter and six feet deep, with the desert sand inside turned by the intense heat into glass – which became known as “trinitite.” Groves' response was, "Is that all?"

J. Robert Oppenheimer (white hat), Maj. Gen. Leslie Groves (to Oppenheimer's left), and other Manhattan Project staff, at Trinity site ground zero after the test of the plutonium bomb, "Gadget." Source: U.S. Army Signal Corp

That wasn’t quite all. The blast was visible up to 160 miles away. Witnesses from as far as Albuquerque and El Paso described a huge fireball and mushroom cloud. The radioactive fallout extended over thousands of square miles.

The first atomic bomb employed in warfare was dropped three weeks later.

Approximately 66,000 people are thought to have died in Hiroshima from the acute effects of the Little Boy bomb, and about 39,000 in Nagasaki from the Fat Man device. In addition, there was a significant later death toll from the effects of radiation and wounds.

Shortly after that, the "Was it really necessary?" questions began. The Monday-morning quarterbacks questioned the morality and military necessity of using nuclear weapons on Japanese cities. Even nuclear physicist Leo Szilard, who in 1939 had composed the letter for Albert Einstein's signature that ultimately led to the Manhattan Project, characterized the use of the bombs as "one of the greatest blunders of history." Since then, there have been similar periodic eruptions of revisionism, uninformed speculation, political correctness, and historical amnesia.

The historical context and military realities of 1945 are often forgotten when judging whether it was "necessary" for the United States to use nuclear weapons. The Japanese had been the aggressors, launching the war with a sneak attack on Pearl Harbor in 1941 and systematically and flagrantly violating various international agreements and norms by employing biological and chemical warfare, the torture and murder of prisoners of war, and the brutalization of civilians, including forcing them into prostitution and slave labor.

But leaving aside whether our enemy "deserved" to be attacked with the most fearsome weapons ever employed, skeptics are also quick to overlook the "humanitarian" and strategic aspects of the decision to use them.

Operation Downfall Meets A Fork In The Road

As a result of the bombing of Hiroshima and Nagasaki, what did not need to happen was "Operation Downfall" – the massive Allied (primarily American) invasion of the Japanese home islands that was being actively and meticulously planned. As Allied forces closed in on the main islands, the strategies of Japan's senior military leaders ranged from "fighting to the last man" to inflicting heavy enough losses on invading American ground forces that the United States would be forced to agree to a conditional peace. Operation Downfall was being pursued primarily because U.S. strategists knew, from having broken the Japanese military and diplomatic codes, that there was virtually no inclination on the part of the Japanese to surrender unconditionally.

Moreover, because Allied military planners assumed that "operations in this area will be opposed not only by the available organized military forces of the Empire [of Japan], but also by a fanatically hostile population," astronomical casualties on both sides were considered inevitable. The losses between February and June 1945 from the Allied invasions of Iwo Jima and Okinawa alone were staggering: 18,000 dead and 78,000 wounded. That harrowing experience was factored into the planning for the final invasion.

As I discussed this retrospective with a friend who is a retired Marine four-star general, he told me this:

[F]ollowing Okinawa and Iwo Jima, all six Marine divisions were being refitted for the attack on the home islands. None of the divisions had post-assault missions, because the casualty estimates were so high that they would initially be combat inoperable until they were again remanned and refitted. Basically, the Marines were to land six divisions abreast on Honshu, then the Army would pass through for the big fight on the plains inland. (Emphasis added.)

(Note: Each division had approximately 23,000 Marines.)

The general continued:

What made this different was, unlike the Pacific campaign to date other than Guadalcanal back in 1942, this would be the first time the Japanese could reinforce their units. After Guadalcanal, in the fights across the ocean, the U.S. Navy isolated the objectives so the Japanese could not reinforce. The home islands would be a different sort of fight, hence the anticipated heavy casualties.

A study by physicist (and future Nobel laureate) William Shockley for the War Department in 1945 estimated that the invasion of Japan would have cost 1.7-4 million American casualties, including 400,000-800,000 fatalities, and between 5 and 10 million Japanese deaths.

These fatality estimates were, of course, in addition to the members of the military who had already perished during almost four long years of war; American deaths already stood at about 292,000. The implications of those numbers are staggering: at the upper end of Shockley's estimate, the invasion of Japan could have killed more than twice as many Americans as had already died in the European and Pacific theaters of WWII up to that time!

The legacy of Little Boy and Fat Man lies in how they reshaped the course of history: a swift end to the war made Operation Downfall unnecessary and thereby prevented millions of deaths.

Some of the largely forgotten problems and near misses of the Manhattan Project are fascinating.

Two months before the Trinity test, U.S. Army Air Forces planes dropped bombs on the Trinity base, mistakenly thinking that its illuminated buildings were the targets for their practice mission:

In the middle of May, on two separate nights in one week, the Air Force mistook the Trinity base for their illuminated target. One bomb fell on the barracks building which housed the carpentry shop, another hit the stables, and a small fire started. Fortunately the barracks occupied by soldiers and civilian scientists were not struck. If the lead plane hit the generator or wires and doused the target lights, then the succeeding planes looked for another illuminated area. This must have been what happened in May of 1945. After all, the crews had come at least a thousand miles to pass their final exam and had probably never been told of anything except targets in the area.

Only four days after the USS Indianapolis delivered components of Little Boy, the Hiroshima bomb, to Tinian Island on July 26, 1945, a Japanese submarine torpedoed and sank her. And the B-29 Superfortress that dropped the Fat Man plutonium bomb on Nagasaki experienced one glitch after another, many of them described in the aptly titled “The harrowing story of the Nagasaki bombing mission.” It's astonishing how much went wrong. Here's a hint of it:

As described in his diary, Schreiber [the scientist entrusted with transporting the plutonium core from Los Alamos to Tinian Island] sat on a hard wooden chair strapped inside the big plane all the way to Tinian. Like everyone working on the Bomb, he was exhausted. So he slept sitting up, sometimes holding the bomb case in his lap. At one point, over the Pacific, he went up to the cockpit to get a better view of what was causing turbulence. One of the crew came up behind and tapped him on the shoulder: “Whatever that thing is you got, it’s rolling around the back of the plane. Maybe you want to corral it.” The wire container had tipped over, the first in a series of mishaps. Schreiber quickly fetched the nation’s most technologically advanced wartime treasure, tied it to the leg of his chair, and went back to sleep.

Mounting Casualties, Both Non-Military And Military

During the years since WWII, much has been made of the moral boundary breached by the use of nuclear weapons, but many military historians regard as far more significant the decisions made earlier in the war to adopt widespread urban bombing of civilians. This threshold was initially crossed by Hitler, who attacked English cities in 1940 and 1941; the practice was later adopted by the Allies, with devastating results for major cities such as Dresden, Hamburg, and Tokyo.

Until then, bombing had focused primarily on military objectives, such as airfields, munitions factories, and oil fields, or on critical transportation links, such as train stations and tracks, bridges, and highways. Never before had civilian areas been attacked on such a scale to break the morale of the populace. More than 100,000 people were killed in a single night of firebombing of Tokyo, March 9-10, 1945. (The figures are imprecise because many bodies were incinerated and never recovered.) More than 22,000 died in Dresden, February 13-15, 1945, and an estimated 37,000 in Hamburg in July 1943.

In an email to me, my former colleague, historian Victor Davis Hanson, called attention to two factors that made the case for using America's nuclear weapons. First, "thousands of Asians and allied prisoners were dying daily throughout the still-occupied Japanese Empire, and would do so as long as Japan was able to pursue the war."

Second, "Maj. Gen. Curtis LeMay [who was in charge of all strategic air operations against the Japanese home islands] planned to move forces from the Marianas to newly conquered and much closer Okinawa, and the B-29 bombers, likely augmented by European bomber transfers after V-E Day, would have created a gargantuan fire-bombing air force that, with short-distance missions, would have done far more damage than the two nuclear bombs."

In fact, the most destructive bombing raid of the war, and in the history of warfare, was the nighttime fire-bombing of Tokyo on March 9-10, 1945. In three hours, the main bombing force dropped 1,665 tons of incendiary bombs, which caused a firestorm that killed some 100,000 civilians, destroyed a quarter of a million buildings, and incinerated 16 square miles of the city. Tokyo was not the only target: By the war's end, incendiaries dropped by LeMay's bombers had totally or partially consumed 63 Japanese cities, killing half a million people and leaving eight million homeless.

The WWII casualty statistics are numbing, and recall a saying often attributed to Joseph Stalin: a single death is a tragedy, a million deaths is a statistic.

During World War I, Europe had lost much of a generation of young men; combatant fatalities alone were approximately 13 million. Memories of that calamity were still fresh three decades later. In 1945, as they deliberated, Allied military planners and political leaders were correct, both strategically and morally, in not wanting to repeat that history. (And Truman, who had succeeded Franklin D. Roosevelt upon the latter's death only four months earlier, would not have wanted his legacy to include causing the unnecessary deaths of hundreds of thousands of American servicemen.) It was their duty to weigh carefully the costs and benefits for the American people, present and future. Had they been less wise or courageous, my generation of post-war baby boomers would have been much smaller.

These decisions are truly the stuff of history writ large, but governments perform such balancing acts all the time. We saw that in the creation of policies to manage the COVID-19 pandemic. How, for example, do we balance the very real and high costs of lockdowns – businesses obliterated, livelihoods lost, children deprived of education and social stimuli – against the costs of being too timid with mask and vaccine mandates: increasing numbers of deaths, the persistent, debilitating post-"recovery" effects of long COVID, the stresses on our healthcare system, and other spinoff impacts on health from COVID-19 infections?

As President Harry Truman found in deciding whether to use Little Boy and Fat Man, sometimes you need to choose the least bad of the alternatives. And to arrive at that, you need to value data and expert analysis over ideology, politics, or vox populi.

I pity the decision-makers.

Note: An earlier version of this article was published by Issues & Insights.